This is the sixth post in our series on Real-time Data Visualization.

Choosing a real-time charting solution can be confusing given the plethora of options available. There are many different approaches, technologies, tools, and terms to keep in mind. However, before we discuss choosing a visualization tool, it’s important to understand how visualization fits into the larger system it’s part of.

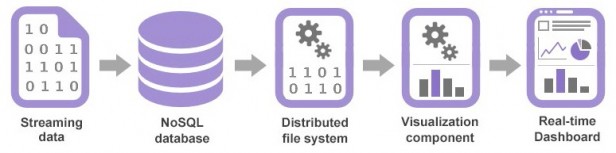

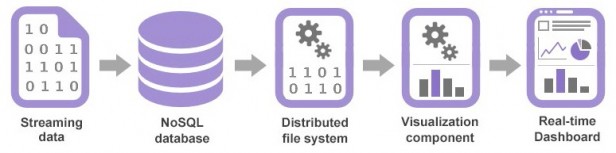

To this end, I encourage you to also glance through the previous post in this series titled ‘How Real-time Analytics Works – A Step-by-step Breakdown.’ It gives you a broad overview of all the components in a real-time analytics system, from the initial data capture to final visualization. For your reference, here’s an image that outlines the process:

On a related note, one term you’ll inevitably run into when discussing real-time analytics is ‘latency.’ Wikipedia defines latency as the ‘time interval between the stimulation and response’ or ‘a time delay between the cause and the effect in the system being observed.’ Let’s look at the role latency plays in a real-time analytics system.

Nuances of Latency

All real-time business intelligence systems have some latency. The duration of the latency depends on various factors such as the volume and variety of data being collected, the speed at which the connectors store and move data within the DBMS, the ability of the querying tools to run complex queries, and finally, on the visualization tool’s ability to transform the data into shapes. It is important to understand the nuances of latency, as the primary goal of a real-time business intelligence system is to minimize the time between an event happening and an action being taken in response. Analyst Richard Hackathorn describes three types of latency:

- Data latency: The time taken to collect and store the data

- Analysis latency: The time taken to analyze the data and turn it into actionable information

- Action latency: The time taken to react to the information and take action
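
To make the three types concrete, here is a minimal Python sketch that splits the total delay for a single event into data, analysis, and action latency. The `EventTimeline` class, its field names, and the sample timestamps are all hypothetical and only illustrate the breakdown; they are not taken from any particular analytics product.

```python
from dataclasses import dataclass


@dataclass
class EventTimeline:
    """Timestamps (in seconds) for one event. All field names are hypothetical."""
    occurred_at: float   # the business event happens
    stored_at: float     # the data has been collected and stored
    analyzed_at: float   # analysis has produced actionable information
    acted_at: float      # an action is taken in response

    def data_latency(self) -> float:
        # Time taken to collect and store the data
        return self.stored_at - self.occurred_at

    def analysis_latency(self) -> float:
        # Time taken to turn stored data into actionable information
        return self.analyzed_at - self.stored_at

    def action_latency(self) -> float:
        # Time taken to react to the information
        return self.acted_at - self.analyzed_at

    def total_latency(self) -> float:
        # Time between the event happening and the action being taken
        return self.acted_at - self.occurred_at


# Made-up sample values, purely for illustration
timeline = EventTimeline(occurred_at=0.0, stored_at=2.0, analyzed_at=5.0, acted_at=9.0)
print(timeline.data_latency(), timeline.analysis_latency(), timeline.action_latency())
# prints: 2.0 3.0 4.0
```

The three parts add up to the total, which is the figure a real-time business intelligence system is ultimately trying to minimize.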

4 important factors to consider when choosing a real-time charting solution:

1. Update & Refresh Intervals

Real-time charts typically work in two steps: a structured file (JSON/XML) is updated first, and then the client is refreshed. A real-time visualization component should therefore be capable of extremely short update and refresh intervals. If data streams in faster than the visualization component can handle, the chart either renders incorrectly or doesn’t load at all. To get around this, some charting components suggest aggregating data over longer time periods and adding a delay to the refresh interval. While some make do with this, the ideal solution is a component that supports update and refresh intervals as short as a second and can handle extremely high volumes of streaming data.
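
The aggregation workaround mentioned above can be sketched in a few lines of Python. This is only an illustration under assumed inputs: the function name `aggregate_by_interval`, the `(timestamp, value)` tuple format, and the choice of averaging within each bucket are assumptions, not the behavior of any specific charting component.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def aggregate_by_interval(
    points: Iterable[Tuple[float, float]], interval_s: float
) -> List[Tuple[float, float]]:
    """Average (timestamp, value) points into buckets of interval_s seconds."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    buckets: Dict[float, List[float]] = defaultdict(list)
    for ts, value in points:
        # Map each timestamp to the start of the bucket it falls into
        bucket_start = (ts // interval_s) * interval_s
        buckets[bucket_start].append(value)
    # One averaged point per bucket, in time order
    return [(start, sum(vals) / len(vals)) for start, vals in sorted(buckets.items())]


# Five raw points collapse into three one-second points
raw = [(0.1, 10.0), (0.6, 20.0), (1.2, 30.0), (1.9, 50.0), (2.5, 40.0)]
aggregated = aggregate_by_interval(raw, interval_s=1.0)
print(aggregated)
# prints: [(0.0, 15.0), (1.0, 40.0), (2.0, 40.0)]
```

The trade-off is visible in the output: the chart receives fewer points per refresh, but individual spikes inside each bucket are smoothed away.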

2. Persistent Storing of Historical Data

While access to immediate information is a given with real-time data visualization, another important requirement is the ability to view and analyze historical information. For example, a day trader watching a stock ticker would want to check frequently for changes in a particular stock, but may also want to go back a day or more to see the stock’s historical trend. In this case, the visualization component should not only plot real-time data, but also allow historical data points to be loaded. Charting solutions address this using a history buffer, which retains either a particular number of historical data points or a historical time range. This historical data facilitates richer analysis and more informed decisions.
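
As a rough illustration of the idea, the sketch below implements a history buffer in Python that can be bounded by a number of points, by a time range, or by both. The `HistoryBuffer` class and its parameters `max_points` and `max_age_s` are hypothetical names chosen for this example, not the API of any charting library.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class HistoryBuffer:
    """Keeps recent (timestamp, value) points, bounded by count and/or age."""

    def __init__(self, max_points: Optional[int] = None, max_age_s: Optional[float] = None):
        # A deque with maxlen silently drops the oldest point when full
        self._points: Deque[Tuple[float, float]] = deque(maxlen=max_points)
        self._max_age_s = max_age_s

    def append(self, ts: float, value: float) -> None:
        self._points.append((ts, value))
        if self._max_age_s is not None:
            # Drop points older than the allowed time range
            cutoff = ts - self._max_age_s
            while self._points and self._points[0][0] < cutoff:
                self._points.popleft()

    def snapshot(self) -> List[Tuple[float, float]]:
        # Copy of the retained history, oldest first
        return list(self._points)


# Buffer limited to the last 3 points
by_count = HistoryBuffer(max_points=3)
# Buffer limited to the last 1.5 seconds
by_age = HistoryBuffer(max_age_s=1.5)
for t in range(5):
    by_count.append(float(t), t * 10.0)
    by_age.append(float(t), t * 10.0)

print(by_count.snapshot())  # prints: [(2.0, 20.0), (3.0, 30.0), (4.0, 40.0)]
print(by_age.snapshot())    # prints: [(3.0, 30.0), (4.0, 40.0)]
```

A count-based limit gives predictable memory use, while a time-based limit gives a predictable look-back window regardless of how fast data arrives.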

3. Large Number of Data Sets

In social media monitoring apps that track hundreds of thousands of mentions of a brand across diverse sources, the volume of data captured is enormous. This takes tremendous processing power on the part of the visualization component to keep up with the pace of updates. It becomes imperative to be able to pass a large number of data points per update. This will ensure your data-heavy dashboard doesn’t break under the load.
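
One way to picture passing many data points per update is to group the incoming stream into batches before handing it to the chart. The Python sketch below assumes a hypothetical helper named `batch_updates`; how a real charting component accepts batched points will differ from product to product.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batch_updates(stream: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a stream of data points into lists of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batch: List[T] = []
    for point in stream:
        batch.append(point)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        # Emit the final, partially filled batch
        yield batch


# Seven points sent as three updates instead of seven
batches = list(batch_updates(range(7), batch_size=3))
print(batches)
# prints: [[0, 1, 2], [3, 4, 5], [6]]
```

Fewer, larger updates mean less per-update overhead, at the cost of each point waiting slightly longer before it appears on screen.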
